Business

See How The Nations Are Grappling with the Unpredictable Dangers of AI

Published 2 years ago

In April 2021, European Union leaders proudly presented a 125-page draft law, the A.I. Act, as a global benchmark for regulating artificial intelligence (AI). However, the landscape changed dramatically with the emergence of ChatGPT, a remarkably human-like chatbot, revealing unforeseen challenges not addressed in the initial draft. The ensuing debate and chaos underscore a critical issue: nations are losing the global race to tackle the dangers of AI.

The Unforeseen Challenge: ChatGPT’s Impact

European policymakers were blindsided by ChatGPT, an AI system that generated its own responses and demonstrated the rapid evolution of AI technology. The draft law did not anticipate the type of AI that powered ChatGPT, leading to frantic efforts to address the regulatory gap. This incident highlights the fundamental mismatch between the speed of AI advancements and the ability of lawmakers to keep pace.

Global Responses to AI Harms

Around the world, nations are grappling with how to regulate AI and prevent its potential harms. President Biden issued an executive order on AI’s national security effects, while Japan and China are crafting guidelines and imposing restrictions, respectively. The UK believes existing laws are sufficient, and Saudi Arabia and the UAE are investing heavily in AI research. However, the lack of a unified global approach has resulted in a sprawling, fragmented response.

Europe’s Struggle and A.I. Act

Even in Europe, known for aggressive tech regulation, policymakers are struggling to keep up. The A.I. Act, despite its touted benefits, is mired in disputes over how to handle the latest AI systems. The final agreement, expected soon, may impose restrictions on risky uses of AI but faces uncertainty in enforcement and a delayed implementation timeline.

AI’s Rapid Evolution and Regulatory Challenges

The core issue lies in the rapid and unpredictable evolution of AI systems, surpassing the ability of regulators to formulate effective laws. Governments worldwide face a knowledge deficit in understanding AI, compounded by bureaucratic complexities and concerns that stringent regulations might stifle the technology’s potential benefits.

Industry Self-Policing Amid Regulatory Vacuum

In the absence of comprehensive rules, major tech companies like Google, Meta, Microsoft, and OpenAI find themselves in a regulatory vacuum, left to police their own AI development practices. Their preference for nonbinding codes of conduct, aimed at accelerating AI development, has led to lobbying efforts to soften proposed regulations, creating a divide between governments and tech giants.

Urgency for Global Collaboration

The urgency to address AI’s risks stems from the fear that governments are ill-equipped to handle and mitigate potential harms. The lack of a cohesive global approach could leave nations lagging behind AI makers and their breakthroughs. The crucial question remains: Can governments regulate this technology effectively?

Europe’s Initial Lead and Subsequent Challenges

Europe initially took the lead with the A.I. Act, focusing on high-risk uses of AI. However, the emergence of general-purpose AI models like ChatGPT exposed blind spots in the legislation. Divisions persist among EU officials on how to respond, with debates over new rules and concerns about hindering domestic tech startups.

Washington’s Awakening to AI Realities

In Washington, policymakers, previously lacking in tech expertise, are now scrambling to understand and regulate AI. Industry experts, including those from Microsoft, Google, and OpenAI, are playing a crucial role in educating lawmakers. Collaborative efforts between the government and tech giants are seen as essential, given the increasing dependence on their expertise.

International Collaborations and Setbacks

Efforts to collaborate internationally on AI regulation have faced setbacks. Promised shared codes of conduct between Europe and the United States have yet to materialize. The lack of progress underscores the challenges of achieving a unified approach amid economic competition and geopolitical tensions.

Future Prospects and the Need for Unified Action

As nations grapple with the complexities of AI regulation, the future remains uncertain. The recent A.I. safety summit in Britain, featuring global leaders, highlighted the transformative potential and catastrophic risks of AI. However, consensus remains elusive, and the urgency for a unified global approach persists.

Conclusion: Navigating the Complex Terrain of AI Regulation

In the global race to tackle the dangers of AI, nations find themselves navigating a complex and rapidly evolving terrain. The unforeseen challenges posed by advanced AI systems like ChatGPT underscore the pressing need for unified action, collaboration between governments and tech industry leaders, and a regulatory framework that balances innovation with safeguards against the potential dangers of AI. As the world grapples with the future of AI, the race continues, and the stakes have never been higher.

Sahil Sachdeva is an International award-winning serial entrepreneur and founder of Level Up PR. With an unmatched reputation in the PR industry, Sahil builds elite personal brands by securing placements in top-tier press, podcasts, and TV to increase brand exposure, revenue growth, and talent retention. His charismatic and results-driven approach has made him a go-to expert for businesses looking to take their branding to the next level.

How to Get Featured in Huffington Post: A Complete Guide for Aspiring Writers and Brands

Published 23 hours ago on February 24, 2026

In today’s digital age, being featured in a prestigious publication can be a game-changer for your personal brand or business. Among the most influential media outlets, Huffington Post stands out as a platform that can amplify your reach exponentially. But how do you get noticed by their editorial team? In this guide, we’ll explore actionable strategies to help you get featured in Huffington Post while maximizing your online visibility and authority.

Why You Should Aim to Get Featured in Huffington Post

The Huffington Post has built a reputation as a trusted source for news, opinion pieces, and lifestyle content. Being featured here isn’t just about bragging rights—it’s about credibility. When your content appears on HuffPost, it signals to your audience that your insights are valuable, trustworthy, and worth sharing.

Moreover, exposure on a platform with millions of monthly visitors can drive significant traffic to your website or social media channels. Whether you’re an entrepreneur, influencer, or thought leader, this kind of publicity can accelerate growth in ways that other marketing tactics may not.

Understanding the Content That Gets Featured in Huffington Post

Before you even think about pitching, it’s crucial to understand the type of content HuffPost publishes. The publication looks for articles that are:

- Newsworthy – Topics that are timely, relevant, and have a broad appeal.

- Engaging – Stories that captivate readers, spark conversation, or inspire action.

- Authentic – Personal narratives and unique perspectives often outperform generic content.

- Well-Researched – Credible sources, data, and insightful commentary make your submission stronger.

Knowing this helps you craft pitches or articles that align with HuffPost’s editorial standards, improving your chances of getting accepted.

Step-by-Step Guide to Get Featured in Huffington Post

- Identify the Right Section

HuffPost has multiple sections ranging from lifestyle and health to business and politics. Research which section aligns best with your expertise. Tailoring your pitch to a specific editor or section demonstrates that you’ve done your homework and increases your chances of being noticed.

- Build a Strong Online Presence

Editors are more likely to feature contributors who already have a professional online presence. Maintain an active blog, LinkedIn profile, or social media accounts where you showcase your knowledge. This not only demonstrates credibility but also ensures that readers can find and engage with your work beyond HuffPost.

- Craft a Compelling Pitch

When reaching out to HuffPost editors, your pitch should be concise, persuasive, and tailored to the publication’s audience. A strong pitch includes:

- A catchy subject line that grabs attention.

- A clear explanation of your article idea and why it matters.

- A brief background about yourself establishing authority on the topic.

- Links to previous work or relevant articles to showcase your writing style.

Avoid generic or mass emails. Personalized pitches show that you value the editor’s time and understand their readership.

- Leverage Guest Blogging Opportunities

HuffPost previously allowed contributors to register and submit articles directly, but in recent years, the process has become more selective. Partnering with sites that have existing relationships with HuffPost can open doors. High-quality guest posts on reputable sites can attract editor attention and pave the way to get featured in Huffington Post.

- Network with Industry Influencers

Networking is often overlooked but highly effective. Attend conferences, webinars, and industry events where editors or contributors might be present. Engaging meaningfully with professionals in your niche increases your visibility and makes it easier for your pitch to land successfully.

- Optimize Your Content for SEO

Even when your goal is to be featured on a major publication, your content should still be optimized for search engines. Using relevant keywords naturally, structuring your article with subheadings, and including meta descriptions all enhance the discoverability of your piece. Editors appreciate contributors who understand both storytelling and digital marketing.

Common Mistakes to Avoid

While aiming to get featured in Huffington Post, avoid these pitfalls:

- Being too promotional – HuffPost favors informative and insightful content over blatant marketing pitches.

- Ignoring submission guidelines – Each section has specific requirements; ignoring them reduces your chances significantly.

- Sending generic pitches – Editors are busy; a generic message is often deleted without a second glance.

- Neglecting editing – Poor grammar or lack of proofreading can overshadow even the most brilliant idea.

By steering clear of these mistakes, you position yourself as a professional contributor worth the editor’s time.

Benefits Beyond Publication

Getting published on HuffPost isn’t just a one-time win. The exposure brings long-term benefits:

- Increased credibility – Your name becomes associated with a leading media outlet.

- Higher search rankings – Backlinks from HuffPost can improve your website’s SEO.

- Networking opportunities – Connections with other writers, editors, and influencers in your field.

- Business growth – More visibility can lead to collaborations, partnerships, and client inquiries.

These advantages make the effort to get featured in Huffington Post more than worthwhile.

Final Thoughts: How to Get Featured in Huffington Post & Boost Your Reach

The journey to being featured in HuffPost is competitive but achievable. By understanding the editorial focus, crafting a compelling pitch, maintaining a professional online presence, and leveraging networking opportunities, you significantly improve your chances. Remember, persistence matters—rejection is not a failure but a learning step toward eventual success.

Publishing on HuffPost can transform your visibility and authority in your niche. Every well-prepared pitch, insightful article, or thoughtful connection brings you closer to achieving that coveted feature. With dedication, strategy, and a clear understanding of what editors are looking for, your story could soon reach millions of readers around the globe.

Your journey starts today—refine your pitch, polish your content, and take the steps necessary to get featured in Huffington Post. The opportunity to amplify your voice and grow your influence is within reach.

Stop Building Features. Start Building Infrastructure.

Published 1 day ago on February 24, 2026

Most founders build features.

The best founders build infrastructure.

Before Banu, we were operators. Our team closed over $600M in commercial real estate transactions and financed more than $100M in deals. We weren’t trying to build software.

We were trying to fix our workflow.

The real bottleneck wasn’t capital.

It wasn’t underwriting.

It wasn’t competition.

It was reach.

Outbound Determines Growth

Modern sales stacks exploded into dozens of tools:

- Data vendors.

- CRMs.

- Email platforms.

- SMS systems.

- Dialers.

- AI add-ons.

Feature inflation created fragmentation.

Fragmentation created inefficiency.

Inefficiency created revenue drag.

So instead of building another feature, we built infrastructure.

Banu combines:

- 185M+ verified contact records

- Nationwide property and business owner data

- Built-in CRM

- Integrated email, SMS, phone, and direct mail

- Unified automation engine

- Zero integrations required

This is not a lead tool.

It is a system of record for outbound.

The Bigger Opportunity

More than 5 million professionals across real estate, mortgage, insurance, and financial services rely on outbound to survive and grow.

If you increase productivity per professional even marginally, the economic impact is enormous.

Infrastructure scales.

Fragmentation stalls.

When you consolidate the stack, you don’t just simplify the workflow.

You increase velocity.

Velocity compounds into revenue.

Revenue compounds into leverage.

The companies that win the next decade won’t build more tools.

They’ll rebuild the foundation.

Banu is free to try at www.banu.ai.

How to Get Featured in The Guardian: A Practical Guide to Earning National Media Coverage

Published 2 days ago on February 23, 2026

If you’re an entrepreneur, founder, author, or marketing professional, you’ve probably wondered how to get featured in The Guardian and other top-tier publications. National media coverage isn’t just about vanity: it builds credibility, drives traffic, attracts partnerships, and positions you as a trusted authority in your industry.

But landing coverage in a globally respected newspaper doesn’t happen by accident. It requires strategy, timing, and a strong understanding of what journalists actually want. In this guide, you’ll learn actionable steps you can take to dramatically improve your chances of securing meaningful press coverage.

Why Getting Featured in The Guardian Matters for Your Brand

Securing a feature in a major publication instantly elevates your reputation. When you get quoted or profiled in a respected outlet, your audience perceives you differently. You’re no longer just promoting yourself—you’ve been validated by an independent and credible source.

Here’s why media coverage at this level is so powerful:

- Instant Credibility and Authority

Being associated with a trusted newspaper enhances your brand image overnight. Potential clients, investors, and partners are more likely to take you seriously.

- High-Quality Traffic

Online articles can drive thousands of readers to your website. Unlike paid ads, this traffic often converts better because it comes from trust-based exposure.

- Long-Term SEO Benefits

Media backlinks from authoritative domains significantly improve your search engine rankings. Over time, this strengthens your overall digital presence.

- Social Proof That Compounds

Once you’ve been featured, you can showcase it on your website, social media, sales pages, and email signatures—amplifying its impact for years.

When done strategically, the decision to pursue media coverage becomes a long-term brand investment, not just a publicity stunt.

Proven Strategies to Get Featured in The Guardian

If you want to get featured in The Guardian, you need more than a good product or service. Journalists are not looking for advertisements; they’re looking for compelling stories that resonate with their readers.

Here are proven strategies that increase your chances of success:

- Develop a Strong News Angle

Reporters care about what’s new, relevant, or timely. Ask yourself:

- Is there a trend you’re tapping into?

- Do you have data or research that supports a bigger narrative?

- Are you responding to a current issue or public debate?

A strong angle connects your expertise to a broader social, economic, or cultural conversation.

- Understand the Right Section

Before pitching, research where your story fits. Is it business, technology, lifestyle, opinion, or environment? Study recent articles in that section and look at:

- Writing tone

- Story structure

- Types of sources quoted

- Headline style

If you want to get featured in The Guardian, your pitch must feel like a natural extension of content they already publish.

- Craft a Personalized Pitch

Avoid mass emails. Journalists can spot generic pitches immediately.

A strong pitch should include:

- A compelling subject line

- A concise explanation of why the story matters now

- Supporting data or unique insights

- A short bio establishing credibility

Keep it clear and direct. Long-winded emails often get ignored.

- Offer Real Value, Not Promotion

One of the biggest mistakes people make is pitching themselves instead of pitching a story. Your goal is not to sell—it’s to inform, educate, or inspire readers.

Provide:

- Original research

- Contrarian viewpoints

- Expert commentary

- Case studies or personal experiences

Journalists are more likely to respond when they see genuine value for their audience.

- Build Relationships Before You Pitch

Engage with journalists on social media. Share their articles. Leave thoughtful comments. Show that you understand their work.

Relationship-building increases familiarity, and familiarity increases response rates.

Common Mistakes to Avoid When Trying to Get Featured in The Guardian

Even highly successful professionals struggle with media outreach because they make avoidable errors. If you’re serious about your PR strategy, watch out for these pitfalls.

- Making It All About You

Editors are focused on readers. If your pitch centers solely on your achievements, it’s unlikely to gain traction. Shift the narrative from “Look at me” to “Here’s why this matters.”

- Ignoring Timing

Timing can make or break your story. A pitch tied to a trending topic or current event has a much higher chance of being accepted.

For example, launching a sustainability initiative is more compelling if it aligns with global climate discussions or policy updates.

- Sending Attachments Without Context

Never attach large files without explanation. Instead, summarize your story in the email and offer additional materials upon request.

- Giving Up Too Soon

Media outreach is a numbers game. One rejection doesn’t mean your story isn’t strong. Sometimes it’s about timing, editorial calendars, or competing priorities.

Persistence—without being pushy—is key.

Long-Term Benefits When You Get Featured in The Guardian

The real power of media coverage unfolds over time. When you successfully get featured in The Guardian, the impact extends far beyond the initial publication date.

- Speaking Opportunities

Event organizers and conference hosts often look for speakers who have credible media exposure. A feature can open doors to keynote invitations and panel discussions.

- Partnership Requests

Brands prefer collaborating with businesses that have established authority. Media recognition signals trustworthiness and influence.

- Increased Investor Confidence

For startups, press coverage can improve investor perception. It demonstrates traction, relevance, and public interest.

- Enhanced Personal Brand

Whether you’re a founder or a thought leader, being featured strengthens your positioning. It separates you from competitors and builds long-term recognition.

Over time, one strong feature can lead to additional coverage in other publications, creating a ripple effect across your industry.

How to Strengthen Your Chances Even Further

While pitching directly can work, you can also improve your success rate through:

- Hiring a PR professional with media connections

- Publishing high-quality thought leadership on your own platform

- Building a strong LinkedIn presence

- Creating original research reports journalists can reference

Consistency matters. Media visibility is often the result of sustained effort rather than a single email.

Additionally, make sure your website and social channels are polished before pitching. If a journalist researches you, your digital presence should reinforce your credibility.

Final Thoughts: Turning Media Coverage into Momentum

Many professionals dream of national press coverage, but only a few approach it strategically. If your goal is to get featured in The Guardian, focus on delivering timely, relevant, and reader-centered stories rather than self-promotion.

Remember:

- Lead with value.

- Support your pitch with data.

- Personalize every outreach effort.

- Be patient and persistent.

Media success is rarely instant—but when it happens, it can transform your brand authority, search visibility, and growth trajectory.

Start by refining your story today. With the right angle and approach, your next email pitch could be the one that earns you a powerful and career-defining feature.

Elevating Your Industry Authority: A Guide to Getting Published in The Real Deal

Published 6 days ago on February 19, 2026

In the high-stakes ecosystem of real estate, visibility is more than just a marketing metric—it is the ultimate form of professional currency. For developers, brokers, and PropTech innovators navigating the skylines of New York, Miami, or Los Angeles, there is one publication that serves as the definitive arbiter of success. It is the “Bible” of the trade, a journalistic powerhouse that dictates market sentiment and records the industry’s most pivotal moments. Naturally, for any ambitious professional, the ultimate milestone is to get published in The Real Deal.

However, landing a spot in these prestigious pages requires more than a standard press release. It demands a sophisticated understanding of the publication’s DNA and a commitment to providing high-level value to a discerning audience.

The Strategic Advantage of Getting Published in The Real Deal

The Real Deal (TRD) is not a typical trade magazine; it is an investigative juggernaut that blends hard-hitting journalism with granular, data-driven insights. When you get published in The Real Deal, you aren’t just reaching a general audience; you are speaking directly to institutional investors, private equity titans, and the policymakers who shape urban development.

The benefits of a feature or a well-placed expert quote include:

- Institutional Credibility: A mention in TRD acts as a “seal of approval” from the industry’s most rigorous editors.

- Networking Leverage: High-profile features often lead to direct inquiries from potential partners and high-net-worth clients.

- SEO Dominance: TRD’s high domain authority means a mention of your name or firm can rank prominently in search results for years to come.

Mastering the Editorial Pitch to Get Published in The Real Deal

Success in the media landscape is rarely accidental. To get published in The Real Deal, you must approach their editorial team with a “news-first” mindset. TRD reporters are famously diligent; they are not looking for promotional fluff or “advertorial” content. They are looking for the “why” behind the market trends.

- Lead with Proprietary Data

TRD has an insatiable appetite for numbers. Whether it is a breakdown of price-per-square-foot trends in a revitalizing neighborhood or an analysis of CMBS loan delinquencies, data is the fastest way to an editor’s heart. If you want to get published in The Real Deal, ensure your pitch is backed by unique research or a statistical angle that hasn’t been explored by competitors.

- Focus on “The Big Play”

While small residential closings are the lifeblood of many agencies, they rarely make the cut at TRD unless there is a celebrity angle or a record-shattering price point. Focus your outreach on large-scale acquisitions, significant legislative shifts, or innovative technological disruptions that have implications for the broader market.

Building Lasting Relationships to Get Published in The Real Deal

Publicity is a marathon, not a sprint. You don’t always need a massive “breaking news” story to start a dialogue with the editorial staff. Sometimes, the most effective way to get published in The Real Deal is to offer yourself as a reliable, objective source of information.

Pro Tip: Identify the specific “beat” reporters who cover your niche—be it retail, industrial, or luxury residential—and offer to provide “on-the-ground” context for stories they are already developing. By becoming a “source of record,” you increase the likelihood of being featured in future investigative deep dives.

Furthermore, pay close attention to the “Daily Dirt” and other TRD newsletters. Understanding what the editors are prioritizing on a daily basis allows you to align your personal or brand narrative with the current news cycle, making your contribution timely and indispensable.

Tailoring Your Narrative to Get Published in The Real Deal

Every publication has a specific “voice,” and TRD is known for being sharp, analytical, and occasionally irreverent. They excel at peeling back the curtain on the industry’s inner workings. If your goal is to get published in The Real Deal, avoid sterilized corporate jargon. Instead, offer a candid perspective on market challenges—such as the impact of fluctuating interest rates or the complexities of office-to-residential conversions.

The editorial team values three core pillars:

- Exclusivity: If you have a scoop, offering it to TRD first is a powerful incentive for coverage.

- Clarity: Get straight to the point of why your story matters to a national audience.

- Accuracy: In an era of misinformation, TRD prides itself on rigorous fact-checking. Ensuring your details are airtight is non-negotiable.

Final Thoughts

The path to media prominence in real estate is competitive, but for those who understand the nuances of the trade, it is entirely achievable. When you finally get published in The Real Deal, it marks a transition from being a mere participant in the market to being a leader of the global real estate conversation.

Get Published in Mashable: A Complete Guide to Securing High-Impact Tech Media Coverage

Published 7 days ago on February 18, 2026

If your goal is to get published in Mashable, you’re aiming for one of the most recognized digital media platforms in the world. Known for covering technology, digital culture, startups, entertainment, and innovation, Mashable offers brands and entrepreneurs a powerful opportunity to gain global exposure. A feature here doesn’t just boost visibility; it strengthens credibility, builds authority, and positions you within influential digital conversations.

Whether you’re launching a startup, introducing new technology, or establishing yourself as a thought leader, understanding how to approach Mashable strategically can significantly increase your chances of success.

Why Mashable Is a Powerful Platform

Founded in 2005, Mashable has grown into a globally respected publication covering innovation, social media, business trends, gadgets, and cultural movements. Its audience includes tech enthusiasts, entrepreneurs, investors, and digitally connected consumers.

Being featured provides:

- Global online visibility

- Strong brand credibility

- Enhanced investor confidence

- Increased website traffic

- SEO benefits from authoritative backlinks

Because Mashable focuses on digital innovation and cultural relevance, your story must offer real value—not just promotion.

What Mashable Editors Look For

Before pitching, it’s essential to understand the editorial mindset. Mashable prioritizes stories that are:

- Innovative and forward-thinking

- Data-driven or research-backed

- Relevant to digital culture

- Timely and newsworthy

- Unique or disruptive

Editors receive countless pitches daily. To stand out, your story must provide a compelling angle that aligns with current trends or emerging technologies.

Step-by-Step Strategy to Get Published in Mashable

Securing coverage requires preparation and strategic positioning. Here’s how to approach the process effectively.

1. Develop a Newsworthy Angle

Mashable is not a promotional platform—it’s a news-driven publication. Ask yourself:

- Is your product solving a major problem?

- Does your startup offer a breakthrough innovation?

- Is there data or research supporting your story?

- Is your announcement tied to a trending topic?

Timing matters. Align your pitch with relevant industry developments to increase interest.

2. Build Credibility Before Pitching

Publications like Mashable are more likely to feature brands with existing traction. Strengthen your credibility by:

- Gaining coverage in smaller media outlets

- Growing your digital presence

- Publishing thought leadership articles

- Building an engaged online audience

Demonstrating authority and momentum makes your story more appealing.

3. Prepare a Compelling Media Kit

Your media kit should include:

- A concise company overview

- Founder bios

- High-resolution images

- Data or research highlights

- Website and social media links

- Previous press mentions

Professional presentation signals readiness for major media exposure.

4. Craft a Strong Pitch

When pitching, clarity and brevity are critical. Your email should:

- Start with a compelling subject line

- Introduce your story in one strong paragraph

- Highlight why it matters now

- Provide supporting data

- Offer availability for interviews

Avoid exaggeration. Authenticity and factual accuracy are key to building trust with journalists.

5. Consider Strategic PR Support

Public relations professionals often have established relationships with media outlets. They understand editorial preferences and can position your story effectively. Working with experienced PR experts increases efficiency and improves your chances of securing meaningful placements.

Benefits of Getting Featured

When you successfully get published in Mashable, the impact can extend far beyond one article.

Increased Brand Authority

Being featured on a reputable tech platform elevates your credibility. Customers and investors view your brand as validated by a trusted media source.

Enhanced SEO Performance

High-authority backlinks from recognized publications can significantly improve search engine rankings.

Greater Investor and Partner Interest

Media validation often attracts venture capitalists, strategic partners, and collaboration opportunities.

Expanded Global Reach

Mashable’s international readership exposes your brand to audiences beyond your immediate market.

Common Mistakes to Avoid

Even strong brands can make errors when seeking media coverage.

Sending Generic Pitches

Mass emails lacking personalization are often ignored. Research the journalist’s recent articles and tailor your pitch accordingly.

Overly Promotional Language

Editors prioritize value for readers, not advertising copy. Focus on insight and impact.

Ignoring Timing

If your pitch isn’t tied to a relevant trend or news cycle, it may lack urgency.

Lack of Supporting Data

Claims without evidence reduce credibility. Provide measurable results, statistics, or proof points.

How to Strengthen Your Media Positioning

If you’re serious about securing coverage, adopt a long-term approach.

Establish Thought Leadership

Publish insightful content on LinkedIn, Medium, or industry blogs. Demonstrating expertise increases recognition.

Build Industry Relationships

Networking at tech events and conferences helps create media connections organically.

Leverage Social Proof

Customer testimonials, user growth numbers, and partnerships strengthen your brand story.

Stay Consistent

Media coverage often builds momentum. Each feature makes the next one easier to secure.

The Role of Timing in Media Success

Timing is one of the most overlooked factors in media outreach. Product launches, funding announcements, research studies, and industry events create natural news hooks. Aligning your pitch with these milestones significantly increases relevance.

Additionally, staying informed about current tech trends allows you to contribute expert commentary when journalists are seeking insights.

Beyond a Single Feature

While many brands focus on landing one high-profile placement, sustained media presence delivers stronger long-term benefits. Consistent storytelling across reputable platforms builds authority over time.

After a successful feature, amplify it across:

- Social media channels

- Email newsletters

- Investor presentations

- Website press sections

- Marketing campaigns

This extends the value of your coverage and reinforces brand credibility.

Final Thoughts

To get published in Mashable is to position your brand at the forefront of digital innovation and cultural relevance. It requires strategic storytelling, newsworthy angles, professional presentation, and persistence. By focusing on authenticity, data-backed insights, and strong editorial alignment, you increase your chances of securing meaningful coverage. Media success doesn’t happen by chance—it’s built through preparation, credibility, and smart outreach.

Business

Get Published in Flaunt Magazine: The Ultimate Guide to Elevating Your Brand

Published on February 18, 2026

If you’re looking to get published in Flaunt Magazine, you’re aiming for more than just media coverage; you’re pursuing cultural credibility. Flaunt is known for blending fashion, art, film, music, and social commentary into visually striking editorial features. Being featured in this iconic publication positions your brand, personality, or creative project within a space that celebrates innovation and influence.

Whether you’re an entrepreneur, artist, model, designer, or public figure, securing a feature in Flaunt can significantly elevate your visibility and authority in creative industries. This guide explains how the process works and how to strategically position yourself for success.

Why Flaunt Magazine Matters

Founded in 1998, Flaunt Magazine has built a reputation as a trend-forward publication that spotlights culture shapers and boundary-pushing creatives. Its audience includes tastemakers, industry insiders, artists, and globally connected readers who value originality and bold storytelling.

Unlike traditional business magazines, Flaunt focuses on aesthetic storytelling and cultural depth. That means your feature must offer more than credentials—it must tell a compelling story that resonates with its artistic and socially aware audience.

Being featured in Flaunt can:

- Strengthen your cultural credibility

- Increase brand recognition

- Enhance your public image

- Open doors to collaborations and partnerships

- Expand your reach in creative markets

Understanding What Flaunt Looks For

Before pitching your story, it’s important to understand the editorial style. Flaunt highlights:

- Visionary creatives and innovators

- Emerging and established artists

- Fashion-forward entrepreneurs

- Influential public figures

- Cultural disruptors and thought leaders

Your story should align with themes of creativity, authenticity, and forward-thinking influence. Simply having a successful business may not be enough—the narrative must connect to art, culture, or meaningful impact.

Steps to Get Published in Flaunt Magazine

Securing a feature requires preparation, positioning, and strategic outreach. Below are the essential steps.

1. Craft a Strong Personal or Brand Narrative

Your story is your most powerful asset. Editors want depth—what drives you, what sets you apart, and how your work contributes to cultural conversations.

Ask yourself:

- What makes your journey unique?

- How does your work influence your industry?

- What values or movements does your brand represent?

A well-defined narrative increases the likelihood of capturing editorial interest.

2. Build Credibility Before Pitching

Publications like Flaunt often feature individuals who already have a strong public presence. This includes:

- Previous media coverage

- Industry recognition

- Social influence

- High-quality branding and visuals

Establishing authority through smaller or niche publications can strengthen your pitch.

3. Prepare High-Quality Visual Assets

Flaunt is visually driven. Professional photography, strong creative direction, and a cohesive aesthetic are critical. Editorial-quality images make your pitch more appealing and demonstrate readiness for a feature.

4. Develop a Strategic Pitch

When reaching out to editors, your pitch should be concise, compelling, and relevant. Include:

- A strong subject line

- A brief introduction

- Your unique angle

- Links to past features or portfolios

- Professional media assets

Avoid generic pitches. Tailor your message to align with Flaunt’s current themes or editorial direction.

5. Consider Professional PR Support

Working with experienced public relations professionals can increase your chances of success. PR experts understand how to position stories, build relationships with editors, and present your brand in a way that aligns with publication standards.

Benefits of Getting Featured

When you successfully get published in Flaunt Magazine, the impact goes beyond a single article.

Elevated Brand Authority

A respected cultural publication adds prestige to your portfolio. This recognition enhances how clients, collaborators, and investors perceive your brand.

Expanded Audience Reach

Flaunt’s readership includes creatives, influencers, and global audiences. A feature introduces your story to new networks that align with artistic and cultural influence.

Long-Term Credibility

Media features remain valuable assets for years. They can be showcased on websites, press kits, social profiles, and investor presentations.

Increased Collaboration Opportunities

Visibility in a culturally respected platform often attracts partnership opportunities from brands and fellow creatives.

Common Mistakes to Avoid

Many aspiring contributors make errors that reduce their chances of being featured.

Pitching Without a Clear Angle

A vague or overly promotional pitch will likely be ignored. Focus on storytelling rather than sales.

Ignoring Editorial Style

Failing to research the publication’s voice and audience can weaken your proposal.

Submitting Low-Quality Visuals

Professional publications expect professional standards. Invest in strong imagery and presentation.

Being Impatient

Editorial calendars can be planned months in advance. Persistence and professionalism are essential.

Positioning Yourself for Long-Term Media Success

Even if your first attempt isn’t successful, the journey to get published in Flaunt Magazine builds valuable experience. Continue strengthening your brand presence, expanding your media portfolio, and refining your narrative.

Media success is cumulative. Each feature enhances credibility, making future placements easier to secure. Consistent branding, strong storytelling, and strategic outreach create momentum over time.

Final Thoughts

To get published in Flaunt Magazine is to align your brand with a publication that celebrates culture, creativity, and innovation. It requires preparation, authenticity, and a compelling story that resonates with its audience.

By developing a strong narrative, building credibility, presenting high-quality visuals, and approaching outreach strategically, you significantly increase your chances of securing a feature. Whether you’re an artist, entrepreneur, or creative visionary, the right positioning can transform your media presence and open doors to meaningful opportunities.

Business

Who Really Pays for Luxury? Inside the Lives of India’s Artisans and the Fight for Fairness by Couture Brand Aksstagga

Published on February 18, 2026

The Indian fashion and clothing industry has captivated the world for centuries, and India currently holds a 63% share of the global textile and garment market. However, there is a side to fashion that rarely makes it into lookbooks or glossy campaigns. We all talk about big fashion houses and designers, but we almost never talk about the most important section of the industry: the hands that make the clothes, the artisans.

Across India, thousands of artisans spend hours stitching, embroidering, dyeing, and finishing garments that eventually sell for prices they could never afford themselves. Traditional embroidery techniques like Chikankari and Zardozi can require hundreds of hours per garment, depending on complexity, because each stitch is done by hand and cannot be rushed. A single lehenga panel can take nearly a month to complete, demanding patience, strength, and extraordinary precision. Many home-based artisans work irregular hours around household duties, which means their embroidery work often extends late into the night. Their labour sustains the industry. And yet, despite forming the backbone of India’s luxury and couture ecosystem, they remain underpaid, and their contribution remains hidden behind garments that travel far beyond the neighbourhoods where they were made.

This is not a problem limited to one craft or one region. Nor is it unique to a handful of brands. The uncomfortable truth is that much of the fashion ecosystem, luxury included, has been built on structures that vastly undervalue the immense labour artisans provide to bring designers’ art to life. Low wages are justified by “market rates.” Long hours are absorbed into informal systems. Middle layers dilute payment before it reaches the hands that actually do the work. These practices are normalised so deeply that they are rarely questioned, let alone challenged.

Anjali Singh Goel, founder and creative director of couture brand Aksstagga, knows this world intimately. Having spent more than two decades in the garment industry before starting Aksstagga, she has navigated these networks herself, moving from center to center, home to home, trying to understand how work flowed and where it stopped. What she discovered over time was not only a lack of fairness but also corruption deeply ingrained in the industry culture.

“Boutiques and fashion houses often assume artisans are being paid because money is leaving the brand,” she says. “But somewhere between the brand and the worker, it gets stuck. And the person doing the work never sees what they deserve.”

In many cases, women artisans are restricted by geography and circumstance. Travel is not always an option. Work has to happen within their neighbourhoods, often inside their homes. This decentralized system is not something brands created; it exists because it has to. However, what brands do control is how they engage with it. And that is where most fail. Because work happens out of sight, it becomes easier to look away. Timelines remain rigid. Payments remain inconsistent. The burden of adjustment falls entirely on the artisan. When delays happen, they are blamed. When quality is exceptional, it is taken for granted.

Aksstagga operates within this same reality but refuses to treat it as inevitable. Anjali believes artists can only produce their best work when they are unburdened by stress over their livelihoods and their minds are free to be creative. She insists on paying among the highest rates she can sustain, even when margins tighten. She actively seeks out trustworthy intermediaries and managers, knowing that removing middle layers altogether is not always practical, but monitoring them is essential.

Moreover, at Aksstagga, timelines are shaped around real lives rather than factory schedules. Many artisans the brand works with are widows or primary earners, supporting entire households through their craft. So, Aksstagga makes sure that their work is flexible enough to fit around caregiving, health, and domestic responsibilities.

Equally important is what Aksstagga does not do. It does not take shortcuts to cut costs, such as using machines for the majority of the stitching and calling the garment “handcrafted,” as many other brands do. Every single stitch on an Aksstagga design is done by hand by artisans employed by the company. It also does not pressure artisans to meet impossible targets in the name of speed. These choices may seem like the bare minimum, but unfortunately, most brands in the market don’t treat them as such.

“There is a lot that happens in this industry that people don’t want to talk about,” Anjali says. “But pretending it doesn’t exist doesn’t protect anyone.”

Anjali is also clear that long-term change cannot come from brands alone. Systemic change requires government involvement, training centers, social security, fair wage benchmarks, insurance, and formal recognition of artisans as workers rather than informal labor. Initiatives like One District One Product (ODOP), which recognizes Chikankari as a defining craft of Lucknow, are steps forward, but they need deeper implementation.

“If we want this craft to survive,” Anjali says, “the people behind it need more than appreciation; they need protection from exploitation.”

Real change, Anjali believes, is rarely glamorous. It happens through difficult conversations, honest accounting, and daily choices that are easy to compromise and harder to uphold. By centering artisans within its ethics and building systems that acknowledge their reality, Aksstagga offers a vision of luxury that holds everyone from designers to policymakers accountable.

Business

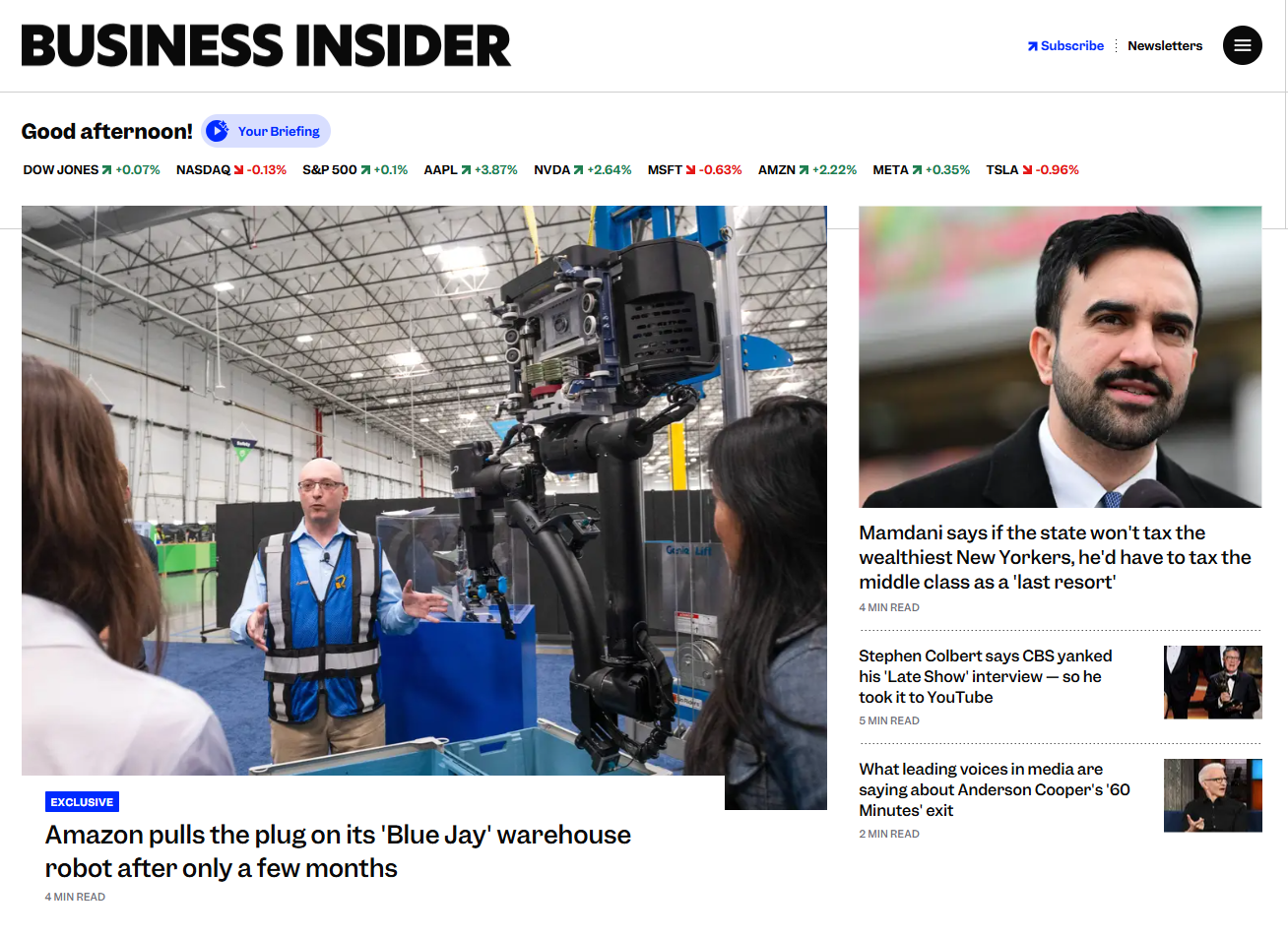

How to Get Featured in Business Insider Magazine and Boost Your Brand

Published on February 18, 2026

In today’s competitive business world, visibility is everything. Entrepreneurs, startups, and even established brands are constantly looking for ways to amplify their presence. One of the most effective ways to do this is by getting media coverage in top-tier publications. Among these, Business Insider Magazine stands out as a credible platform that can elevate your brand to a global audience. This guide will walk you through how to get featured in Business Insider Magazine, why it matters, and strategies to increase your chances of being noticed.

Why You Should Aim to Get Featured in Business Insider Magazine

Before diving into the “how,” it’s important to understand the “why.” Business Insider Magazine is renowned for its focus on business trends, technology, finance, and entrepreneurship. Being featured in such a publication does more than give you credibility—it opens doors to new opportunities.

Here’s what being featured can do for your business:

- Increase Brand Authority – Being mentioned in a respected outlet instantly boosts trust among your target audience.

- Drive Website Traffic – A high-authority backlink from Business Insider can increase your site’s traffic and improve SEO.

- Attract Investors and Partners – Investors often look at media coverage as a sign of legitimacy.

- Reach a Global Audience – Business Insider has millions of readers worldwide, exposing your brand to new markets.

By understanding these benefits, you can see why so many companies strive to get featured in Business Insider Magazine.

Steps to Get Featured in Business Insider Magazine

Getting media coverage isn’t just about sending an email and hoping for the best. It requires a strategic approach. Here are some steps to increase your chances of being featured.

- Build a Strong Brand Presence

Before you pitch to any publication, your brand needs to stand out. This involves:

- Professional Website: Ensure your website is polished, easy to navigate, and has clear information about your products or services.

- Active Social Media: Engage consistently on LinkedIn, Twitter, and Instagram, as journalists often research social media activity before featuring a brand.

- Content Marketing: Publish insightful blog posts or thought leadership articles that demonstrate your expertise.

A strong digital footprint makes it easier for journalists to justify featuring your brand, and it increases your credibility, which is essential if you want to get featured in Business Insider Magazine.

- Identify Relevant Journalists and Editors

Business Insider has different sections ranging from tech and finance to lifestyle and leadership. To improve your chances:

- Research journalists who cover your industry.

- Follow them on social media to understand the topics they write about.

- Engage with their content by commenting and sharing insights.

By targeting the right editor or journalist, you can tailor your pitch and increase the likelihood of getting featured.

- Craft a Compelling Pitch

Your pitch is your first impression, so make it count. A compelling pitch should:

- Be concise and engaging. Journalists receive hundreds of pitches daily, so clarity is key.

- Highlight your unique story. What makes your brand different? Why is it newsworthy?

- Include supporting data or results. Numbers, case studies, or testimonials can make your story more persuasive.

Remember, the goal is to get featured in Business Insider Magazine, so your pitch should focus on what will interest their audience rather than simply promoting your product.

- Leverage PR Agencies or Media Platforms

If pitching directly feels daunting, working with PR professionals can be highly effective. PR agencies often have established relationships with media outlets, including Business Insider Magazine. They can help you:

- Develop a media strategy

- Craft a professional press release

- Connect with journalists directly

Investing in PR can significantly increase your chances of getting noticed by top-tier publications.

- Showcase Your Expertise Through Thought Leadership

Business Insider often features industry experts who provide insights on trends, innovations, or market developments. Position yourself as a thought leader by:

- Writing insightful articles for your own blog or LinkedIn

- Participating in industry webinars or podcasts

- Sharing original research or case studies

When you demonstrate your expertise, you naturally increase your chances of getting featured in Business Insider Magazine.

Common Mistakes to Avoid When Trying to Get Featured in Business Insider Magazine

While it’s exciting to aim for media coverage, several pitfalls can reduce your chances:

- Generic Pitches: Avoid mass emailing journalists with a one-size-fits-all pitch. Personalized outreach is far more effective.

- Overly Promotional Content: Business Insider prefers stories that provide value, not direct advertisements. Focus on insights and newsworthiness.

- Ignoring Follow-ups: If you don’t get a response, a polite follow-up can make a difference. Persistence is key, but avoid being pushy.

By steering clear of these mistakes, your strategy to get featured in Business Insider Magazine will be much more effective.

Real-Life Examples of Brands That Benefited from Media Coverage

Seeing others succeed can be motivating. Brands that have been featured in Business Insider often experience a surge in visibility and credibility. For example:

- A tech startup shared its innovative solution in a feature article, leading to investor interest and a funding round.

- A lifestyle brand highlighted its sustainability efforts, which led to partnerships with eco-conscious retailers.

These examples demonstrate the tangible impact that being featured in a high-profile publication can have on your growth trajectory.

Tips to Maintain Long-Term Media Presence

Getting featured is just the first step. Maintaining your media presence ensures continued visibility:

- Consistently Share Updates: Keep journalists informed about new products, milestones, or industry insights.

- Engage With Media Coverage: Share your feature on social media and your website to amplify the reach.

- Network With Industry Experts: Build relationships with journalists and editors for future opportunities.

Sustained efforts increase your credibility and make it easier to get featured in Business Insider Magazine again in the future.

Conclusion

If you’re serious about growing your brand, aiming to get featured in Business Insider Magazine should be part of your strategy. It’s not just about recognition—it’s about credibility, audience growth, and business opportunities. By building a strong brand, targeting the right journalists, crafting compelling pitches, and positioning yourself as a thought leader, you can significantly improve your chances of being noticed.

Remember, media coverage is both an art and a strategy. With patience, persistence, and a well-crafted plan, you can secure a feature in one of the world’s most respected business publications and take your brand to new heights.

Business

How to Get Featured in People Magazine: A Complete, Real-World Guide

Published on February 16, 2026

For entrepreneurs, authors, public figures, and changemakers, national media coverage is more than a vanity milestone—it’s a credibility accelerator. And when it comes to mainstream recognition, few platforms carry the cultural authority and reach of People Magazine.

To get featured in People Magazine is to instantly elevate your story in the eyes of millions. It signals trust. It builds influence. It opens doors that were previously closed.

But here’s the truth: it doesn’t happen by accident.

If you’ve ever wondered how to position yourself strategically and professionally to get national attention, this guide walks you through exactly what it takes to get featured in People Magazine, without hype or unrealistic promises.

Why So Many Brands Want to Get Featured in People Magazine

There’s a reason this publication remains one of the most recognizable media brands in America. With decades of trusted storytelling, celebrity coverage, and human-interest features, it has built a reputation for highlighting compelling journeys.

When you get featured in People Magazine, several things happen almost instantly:

- Your credibility increases overnight

- Your Google search results strengthen

- Social proof expands dramatically

- Speaking and partnership opportunities grow

- Brand trust accelerates

In today’s crowded digital world, trust is currency. A respected national feature gives you third-party validation that advertising simply cannot buy.

What It Really Takes to Get Featured in People Magazine

Let’s clear up a common misconception: editors are not looking for self-promotion. They’re looking for stories that resonate with their audience.

If you want to get featured in People Magazine, your story must check at least one of these boxes:

- Emotionally compelling

- Timely and relevant

- Culturally significant

- Inspirational or transformational

- Connected to a trending topic

The publication centers around people—not products. Even if you run a business, the human journey behind it matters more than revenue numbers.

Crafting a Pitch That Helps You Get Featured in People Magazine

A strong pitch is concise, clear, and emotionally engaging.

Here’s a simple structure you can follow:

- A Compelling Subject Line

Think like a headline writer. Make it intriguing, but truthful.

Example:

Former Teacher Builds National Nonprofit After Personal Loss

- A Powerful Opening Paragraph

In 3–5 sentences, summarize the heart of your story and why it matters now.

- The Human Angle

Explain the transformation, struggle, breakthrough, or impact.

- Why It Fits Their Audience

Show alignment with the type of stories they publish.

- Supporting Assets

Include professional photos, social links, previous media mentions, and contact information.

If your goal is to get featured in People Magazine, your pitch should feel like the beginning of a great article, not a sales email.

Build Authority Before You Try to Get Featured in People Magazine

Major publications rarely spotlight someone with zero digital footprint. Before pursuing national coverage, strengthen your foundation.

Here’s how:

- Appear on niche podcasts

- Publish guest articles

- Get quoted in industry blogs

- Grow a consistent social presence

- Clarify your brand message

Momentum attracts media. When editors see that others are already talking about you, it reduces perceived risk.

If you want to get featured in People Magazine, visibility in smaller outlets often acts as a stepping stone.

Create a Newsworthy Moment

Sometimes the missing piece isn’t your story—it’s timing.

To improve your chances of getting featured in People Magazine, consider creating a media hook:

- Launch a new initiative

- Partner with a recognizable brand

- Publish a book

- Host a large-scale event

- Announce research or data findings

- Tie your story to a national awareness month

Journalists respond to relevance. A fresh angle dramatically increases the likelihood of a response.

Using Public Relations to Get Featured in People Magazine

While it’s possible to pitch yourself, many individuals choose to work with experienced publicists. A skilled PR professional understands editorial calendars, relationships, and positioning.

They can help you:

- Refine your story angle

- Identify the right editor

- Pitch strategically

- Follow up professionally

- Prepare for interviews

If your budget allows, this can be a worthwhile investment. However, representation alone does not guarantee you will get featured in People Magazine. The story must still stand on its own.

Optimize Your Online Presence Before You Get Featured in People Magazine

Imagine an editor loves your pitch. The first thing they’ll do is research you.

What will they find?

Before seriously pursuing a feature in People Magazine, ensure you have:

- A professional website

- A polished bio

- Updated headshots

- Consistent social media branding

- Clear messaging about your impact

Your digital presence should reinforce your credibility, not raise questions.

Timing and Editorial Calendars Matter

Many national publications plan content months in advance. Understanding seasonal trends can give you a major advantage.

If you want to get featured in People Magazine, research:

- Holiday-themed issues

- Annual special editions

- Cultural heritage months

- Health and wellness seasons

- Awards cycles

Pitching at the right moment can make the difference between silence and serious consideration.

Persistence Is Part of the Process

Rejection—or no response at all—is normal in media outreach.

Even highly accomplished individuals pitch multiple times before they successfully get featured in People Magazine. Sometimes the angle needs refining. Sometimes the timing is off. Sometimes the inbox is simply full.

Follow up once or twice politely. If you don’t hear back, revisit your positioning and try again later with a stronger hook.

Professional persistence signals confidence—not desperation.

What Happens After You Get Featured in People Magazine?

Preparation matters just as much as the feature itself.

If you do get featured in People Magazine, maximize the exposure:

- Add the feature to your website press page

- Share it across social platforms

- Include it in email marketing

- Update your media bio

- Reference it in speaking pitches

National visibility is a powerful tool—but only if you leverage it properly.

The Mindset Required to Get Featured in People Magazine

Perhaps the most overlooked element is mindset.

Media recognition should be approached as a long-term strategy, not a quick win. Focus on impact, service, and storytelling—not ego.

People respond to authenticity. Editors can sense manufactured narratives instantly. The most powerful way to get featured in People Magazine is to share a story that is honest, vulnerable, and relevant.

When your work genuinely helps others, media coverage becomes a natural extension of that impact.

Final Thoughts: Is It Possible to Get Featured in People Magazine?

To get featured in People Magazine, you must think like both a storyteller and a strategist. Build credibility. Create relevance. Craft compelling pitches. Stay consistent.

National recognition isn’t reserved for celebrities alone. Entrepreneurs, advocates, small business owners, and everyday individuals with extraordinary journeys are featured every year.

The real question isn’t whether it’s possible.

The real question is:

Are you ready to position your story in a way that millions of readers can connect with?

Start refining your narrative today. With the right strategy and sustained effort, you can absolutely get featured in People Magazine and use that spotlight to amplify the impact you’re here to make.

Business

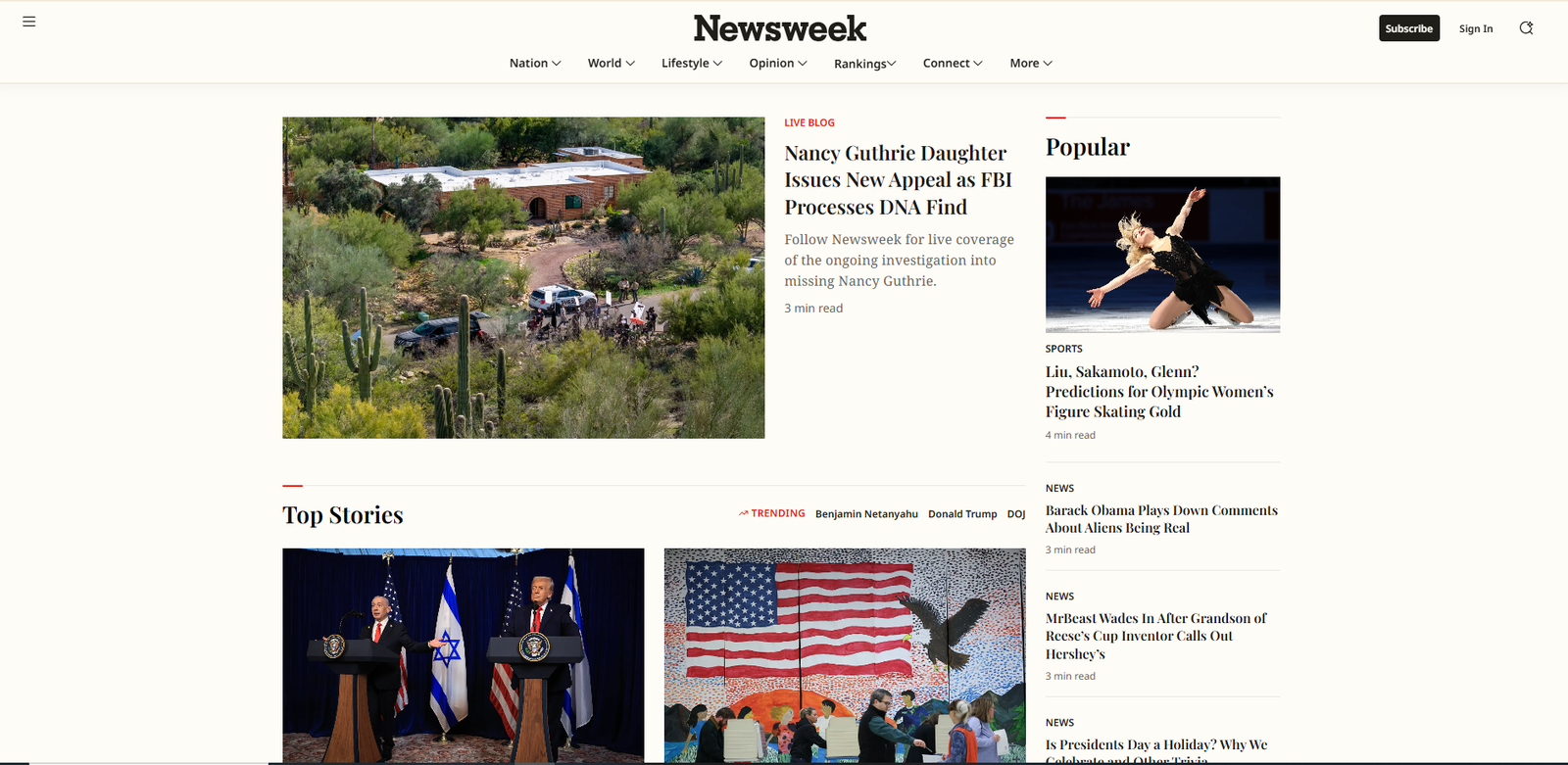

How to Get Featured in Newsweek: A Complete Guide for Brands and Entrepreneurs

Published on February 16, 2026

Many entrepreneurs, founders, and personal brands share one major PR goal: to get featured in Newsweek. Being highlighted in a globally recognized publication instantly elevates your credibility, authority, and visibility. But while the idea sounds glamorous, the path to earning that spotlight requires strategy, positioning, and patience.

If you’re serious about growing your reputation and reaching a wider audience, this guide will walk you through exactly what it takes.

Why Brands Want to Get Featured in Newsweek

There’s a reason ambitious professionals aim to get featured in Newsweek. Media exposure at this level acts as a powerful trust signal. When your name or company appears in a respected publication, people naturally perceive you as more authoritative and credible.

Here’s what that kind of feature can do for you:

- Strengthen your personal or corporate brand

- Increase inbound opportunities

- Build investor confidence

- Improve conversion rates

- Open doors to speaking engagements and partnerships

In today’s competitive digital landscape, attention is currency. High-level press coverage separates industry leaders from everyone else.

However, major publications don’t feature brands randomly. They tell stories that matter to their audience. That’s an important distinction many people overlook.

What It Really Takes to Get Featured in Newsweek

If your only strategy is sending a generic press release, it’s unlikely you’ll get featured in Newsweek. Editors and contributors receive hundreds, sometimes thousands, of pitches every week. To stand out, you need more than ambition. You need a compelling angle.

Here’s what truly matters:

- A Newsworthy Story

Publications don’t advertise businesses for free. They cover stories that are timely, relevant, and impactful. Ask yourself:

- Are you launching something innovative?

- Do you have unique data or research?

- Have you achieved something unusual or groundbreaking?

- Is your journey inspiring or socially relevant?

Your story must align with current trends or ongoing conversations in your industry.

- Clear Positioning

Journalists look for experts, not generalists. If you want to position yourself to get featured in Newsweek, you must clearly define your niche. The more specific your expertise, the easier it is for writers to frame your story.

For example:

- Not just “business coach” — but “leadership coach helping tech founders scale remote teams.”

- Not just “fitness trainer” — but “trainer specializing in metabolic recovery for busy executives.”

Specificity creates authority.

- Credibility Signals

Before featuring you, writers often verify your legitimacy. This includes:

- Professional website

- Media kit

- Social proof

- Prior press mentions

- Client testimonials

- Clear brand messaging

Your online presence should reinforce the story you’re pitching.

Proven Strategies to Get Featured in Newsweek

Now let’s talk about actionable steps. If your goal is to get featured in Newsweek, you need a proactive approach rather than wishful thinking.

Build Relationships, Not Just Pitches

One of the most effective ways to get featured in Newsweek is relationship-building. Follow journalists and contributors in your niche. Engage thoughtfully with their content. Understand the type of stories they write.

When you finally pitch, it won’t feel cold—it will feel relevant.

Craft a Strong Pitch

A strong pitch is concise, personalized, and value-driven. It should include:

- A compelling subject line

- A brief introduction

- A clear angle

- Why the story matters now

- Supporting data or proof

- Contact information

Avoid long-winded explanations. Editors appreciate clarity and directness.

Leverage Expert Commentary

Sometimes the fastest way to improve your chances of getting featured in Newsweek is to offer expert commentary on trending topics. When major news breaks in your industry, position yourself as a source.

You can do this by:

- Publishing thought leadership on LinkedIn

- Responding quickly to media queries

- Providing unique insights backed by data

Timeliness is critical. News moves fast.

Use Strategic PR Support

If your budget allows, working with a PR professional can significantly streamline the process. Experienced publicists understand editorial standards, pitch formatting, and media timelines. They can refine your narrative and connect you with the right contributors.

While hiring help doesn’t guarantee coverage, it can increase efficiency and professionalism in your outreach.

Mistakes to Avoid When You Want to Get Featured in Newsweek

Even strong brands make errors that sabotage their goal of getting featured in Newsweek. Avoid these common pitfalls:

Being Too Promotional

Editors are not looking for advertisements. If your pitch reads like a sales page, it will likely be ignored. Focus on storytelling, impact, and insights rather than features and pricing.

Ignoring Timing

Pitching holiday content in January or trend-based stories months too late reduces your relevance. Study editorial calendars and stay aware of what’s currently happening in your industry.

Sending Mass Emails

Generic, copy-paste emails are easy to spot. Personalization significantly increases response rates. Reference a recent article or explain why your story aligns with the writer’s beat.

Lacking a Clear Narrative

If your story doesn’t have a strong central theme, it becomes difficult to frame into an engaging article. Before pitching, summarize your idea in one powerful sentence. If it’s confusing, refine it.

Building Long-Term Media Visibility

While many people focus on a single big feature, sustainable media presence is more powerful. Consistent visibility builds authority over time.

To maintain momentum:

- Continue producing thought leadership content

- Strengthen your industry network

- Collect testimonials and case studies

- Document milestones and achievements

- Stay active in relevant conversations

Media recognition is often the result of cumulative credibility rather than one viral moment.

The Mindset Behind Media Success

If you truly want to get featured in Newsweek, understand that persistence plays a major role. Rejection is common. Silence is common. But so is eventual success for those who refine their approach.

Instead of asking, “Why haven’t I been featured yet?” ask:

- Is my story strong enough?

- Am I targeting the right angle?

- Am I delivering value to the publication’s audience?

- Have I built enough authority in my niche?

Media coverage is earned through positioning, preparation, and professionalism.

Final Thoughts

To get featured in Newsweek, you need more than a dream: you need strategy. Focus on crafting a compelling narrative, building real credibility, and approaching journalists with respect and clarity.

When you combine expertise, timing, and strong storytelling, media opportunities become far more accessible. Whether you pursue PR independently or with professional support, remember that high-level exposure is the result of intentional brand building.

Stay consistent. Refine your message. Lead with value.

And when the right story meets the right moment, your feature becomes not just possible but far more likely.
