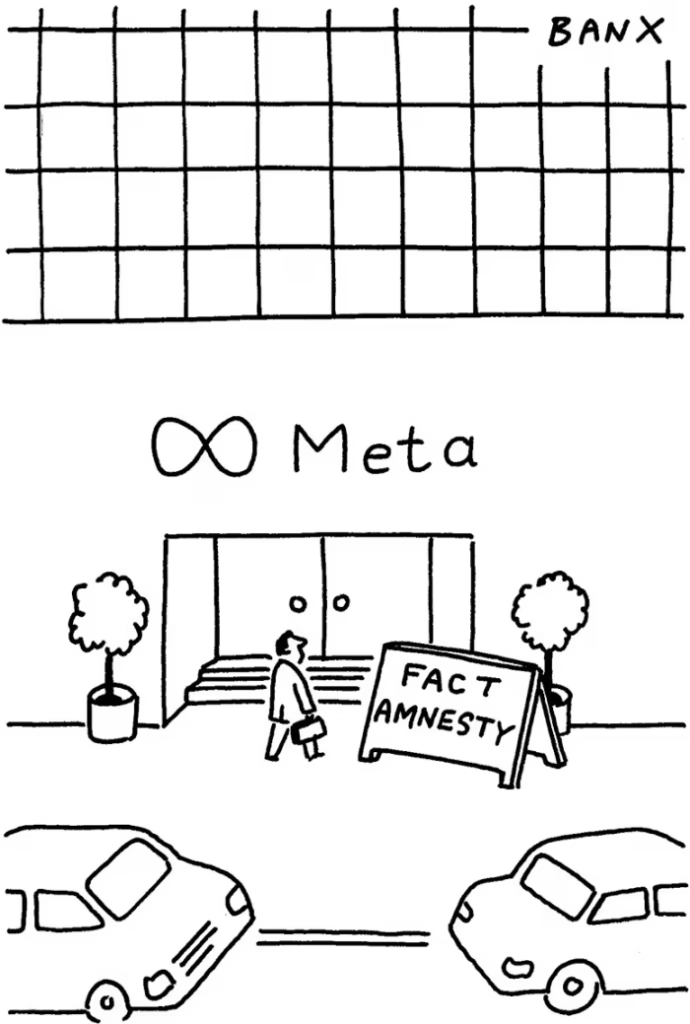

Mark Zuckerberg’s recent decision to end Meta’s fact-checking program in favour of a community-driven “Community Notes” system has sparked significant debate and concern. Announced on January 7, 2025, the shift marks a pivotal moment in Meta’s content moderation strategy, with Zuckerberg emphasising free speech, simpler policies, and fewer censorship mistakes. The transition comes as the company reassesses its approach in light of a changing political and social climate. Community Notes will replace the established fact-checking process, which relied on third-party organisations to review and flag misinformation, and critics fear the move could allow false information to spread unchecked.

The Decision Reflects Broader Shifts in Content Moderation Systems

Meta’s decision fits a broader trend of social media companies reevaluating their content moderation systems. Since 2016, Meta’s fact-checking program had relied on more than 90 independent organisations, certified by networks such as the International Fact-Checking Network, to review potentially misleading content, label it as true or false, and in some cases limit its spread once misinformation was identified. Zuckerberg’s announcement breaks with this approach, arguing that the existing systems had become too complex and were making too many mistakes. The company says it will continue to focus on severe violations, including those related to terrorism, child exploitation, and drugs.

Relaxation of Content Policies and Focus on High-Severity Violations

Alongside ending the fact-checking program, Meta will relax its content policies on political and social topics such as immigration and gender. Automated moderation will be refocused on “high-severity” violations, while less severe infractions will be acted on mainly when users report them. Zuckerberg also stated that the company’s trust and safety team would relocate from California to Texas, underlining the change in direction. The announcement reverses earlier policies that limited political content; Zuckerberg explained that those limits had been introduced in response to feedback that political posts were causing users distress. Meta now plans to reintroduce more political content on Facebook, Instagram, and Threads, while aiming to keep the environment positive.

Heightened Scrutiny from Political Figures and the Polarisation of Content Moderation

The changes come as Meta, like many other tech companies, faces heightened scrutiny from political figures, especially Republicans. Critics in this camp have long argued that Meta’s fact-checking system was biased in favour of left-leaning views. The claim remains contested, but it underscores the growing politicisation of content moderation. Many of the same conservatives have celebrated X’s Community Notes system, which lets users participate directly in moderating content. Others worry that this model, while encouraging community involvement, could amplify echo chambers and allow misinformation to spread unchecked when moderation is left to users without professional oversight.

Zuckerberg Criticises Government Censorship and Advocates for Free Speech

Zuckerberg’s statement also reflects broader concerns about the role of social media in shaping public discourse. He criticised governments and media organisations for pushing to censor content, describing the recent US elections as a cultural tipping point that demanded a rethink of how speech is moderated. The move toward user-driven moderation feeds into the ongoing debate over the role of tech companies in regulating online speech. That debate is further complicated by the global context: governments around the world have sought to impose stricter rules on online content, making international censorship pressures harder for U.S.-based companies like Meta to navigate.

AI and Neurotechnology: The New Frontiers in Communication

While the immediate implications of Meta’s content moderation shift are being debated, a much larger challenge is emerging: the intersection of artificial intelligence (AI) and neurotechnology. As AI systems such as OpenAI’s ChatGPT and Google’s Gemini become more advanced, they are increasingly able to generate human-like content that blurs the line between human and machine communication. This has profound implications for how information is disseminated and consumed. The ability of AI to produce realistic, contextually relevant text raises concerns about accountability and about misuse, such as the spreading of disinformation.

The Growing Intersection of AI and Neurotechnology

The rise of neurotechnology, which seeks to understand and even interact with the human brain, adds another layer of complexity. Companies like Meta are investing in neurotechnology alongside AI, raising questions about how brain data will be used and whether it could be exploited. As these technologies advance, they could fundamentally change how we think about communication, truth, and privacy. AI’s growing ability to imitate human language and reasoning, combined with neurotechnology’s potential to read or influence brain activity, could create serious ethical dilemmas, including the manipulation of public opinion and the erosion of personal autonomy.

The Need for Ethical Governance in the Age of AI and Neurotechnology

In light of these developments, critics argue that the real threat to free speech and trust in communication lies not only in changes to content moderation policies but also in technologies that could alter the very nature of human communication. The lack of regulation surrounding AI and neurotechnology could worsen misinformation, manipulation, and privacy violations. As Meta and other tech companies navigate this rapidly changing landscape, the need for strong governance and international cooperation has never been more pressing.

The Future of Digital Communication: Balancing Innovation and Ethical Responsibility

The debate surrounding Meta’s decision to end its fact-checking program is just the tip of the iceberg in the broader conversation about the future of online communication. With the rise of AI and neurotechnology, it is essential to consider not only how content is moderated but also how emerging technologies are shaping the very fabric of our digital interactions. As society grapples with these challenges, finding a balance between innovation and ethical responsibility will be crucial to safeguarding truth, trust, and privacy in the digital age.